AB / Multivariate Testing in Mailchimp

Do you ever use AB testing (sometimes called multivariate testing) in Mailchimp? It’s one of the tools at your fingertips to help make your marketing work harder for you, so if not, give it a go. It’s available in several areas of the platform, including pop-up forms, email campaigns and SMS.

What does it mean?

In short, you test 2-3 versions of something, see which one is the most “successful” (you decide on the definition of “success”), then roll the winning version out to everyone else. Here are some of the things you can AB test:

AB testing pop-up subscribe forms

Try 2 different versions. Variations could include:

Different wording

Different lead magnets / downloads / offers

Data fields e.g. one asks for first name, one doesn’t

Design

Button CTA text

Corner re-open CTA text

See which one has the highest conversion rate (i.e. the ratio of subscribes to views), then roll out the winning version. Or test more variations to optimise even further.
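If you like to double-check the numbers yourself, here’s a minimal sketch of how the conversion rate comparison works. The view and subscribe counts and the form names are made-up placeholders, not real Mailchimp report data; you’d copy your own figures from each form’s report.

```python
from dataclasses import dataclass


@dataclass
class FormVersion:
    name: str
    views: int       # placeholder: how many people saw the pop-up
    subscribes: int  # placeholder: how many of them subscribed

    @property
    def conversion_rate(self) -> float:
        # Conversion rate = subscribes / views (0 if the form was never viewed)
        return self.subscribes / self.views if self.views else 0.0


def pick_winner(versions: list[FormVersion]) -> FormVersion:
    """Return the version with the highest conversion rate."""
    return max(versions, key=lambda v: v.conversion_rate)


# Hypothetical example versions with invented counts
versions = [
    FormVersion("A: newsletter sign-up", views=1200, subscribes=36),
    FormVersion("B: discount offer", views=1150, subscribes=58),
]
for v in versions:
    print(f"{v.name}: {v.conversion_rate:.1%}")
print("Winner:", pick_winner(versions).name)
```

Comparing rates rather than raw subscribe counts matters because the two versions rarely get exactly the same number of views. With small numbers of views, a difference of a few subscribes may just be chance, so give the test time to gather enough data.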

Multivariate testing for email campaigns

Traditionally called AB testing, but as you can try up to 3 versions, it’s also referred to as multivariate testing. You can (and should) only test one variable at a time. Options include:

Subject line

Sender name

Send time

Email content

It works by sending the test versions to a percentage of your audience or segment, e.g. with 3 versions, each one goes to 10% (30% in total). The most successful version then sends to the remaining 70% of your audience.
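Here’s a minimal sketch of that split arithmetic, assuming an example audience of 5,000 contacts and 10% per version. Mailchimp’s own rounding of group sizes may differ slightly; this just shows how the test groups and the remainder relate.

```python
def split_audience(audience_size: int, versions: int, pct_per_version: int) -> dict:
    """Work out test group sizes and the remainder that gets the winner.

    pct_per_version is a whole-number percentage (e.g. 10 for 10%).
    """
    if versions * pct_per_version > 100:
        raise ValueError("Test groups can't add up to more than 100% of the audience")
    # Integer maths avoids floating-point surprises; any fraction is rounded down
    per_version = audience_size * pct_per_version // 100
    test_total = per_version * versions
    return {
        "per_version": per_version,
        "test_total": test_total,
        "winner_send": audience_size - test_total,
    }


# Example values: 5,000 contacts, 3 versions at 10% each
print(split_audience(5000, versions=3, pct_per_version=10))
```

With these example values, each version goes to 500 people (1,500 in total) and the winner goes to the other 3,500, which is the 70% mentioned above.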

You can choose how long to wait after the test emails are sent before measuring success (e.g. 4 hours or 24 hours), after which the winning version automatically sends to the remainder. See my pro tips a bit further down.

You can choose how “success” is measured: open rate, click rate, revenue (if you have a connected ecommerce store) OR a manual decision. As I covered in my blog last week about why reported opens and clicks can’t be trusted, open and click rates are not necessarily accurate, so use them with caution.

Pro tips:

I would suggest always allowing 24 hours to measure the test before sending the winning version. People behave differently at different times of the day (e.g. engaging for work vs personal reasons), so what wins at 7am might not win at 1pm. For the same reason, I would NOT send the test emails on a Friday: the winning version would then go out on Saturday, and people behave differently on weekdays than at weekends.

Choose manual selection to determine the winner. Mailchimp can only measure so much: it doesn’t know about e.g. phone enquiries, replies to your emails or bookings via another platform, all of which directly show the success of your campaign.
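If you want to sanity-check the timing tip above before scheduling, here’s a minimal sketch. It assumes the winner goes out exactly a set number of hours after the test send; the dates are just examples.

```python
from datetime import datetime, timedelta


def winner_send_time(test_send: datetime, wait_hours: int = 24) -> datetime:
    """When the winning version goes out, given the test send time."""
    return test_send + timedelta(hours=wait_hours)


def lands_on_weekend(test_send: datetime, wait_hours: int = 24) -> bool:
    # weekday(): Monday is 0 ... Saturday is 5, Sunday is 6
    return winner_send_time(test_send, wait_hours).weekday() >= 5


# Example dates: 1 March 2024 was a Friday, 5 March 2024 a Tuesday
friday_9am = datetime(2024, 3, 1, 9, 0)
tuesday_9am = datetime(2024, 3, 5, 9, 0)
print(lands_on_weekend(friday_9am))
print(lands_on_weekend(tuesday_9am))
```

A side benefit of waiting exactly 24 hours is that the winner goes out at the same time of day as the test did, so the time-of-day effect stays the same for both sends.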

AB testing SMS

Are you sending texts through Mailchimp? If so, you can use AB testing to send two versions of a text in a similar way to email campaigns, albeit with fewer options.

Screenshot coming - it’s disappeared from my Mailchimp demo account today so I can’t show you at the moment!

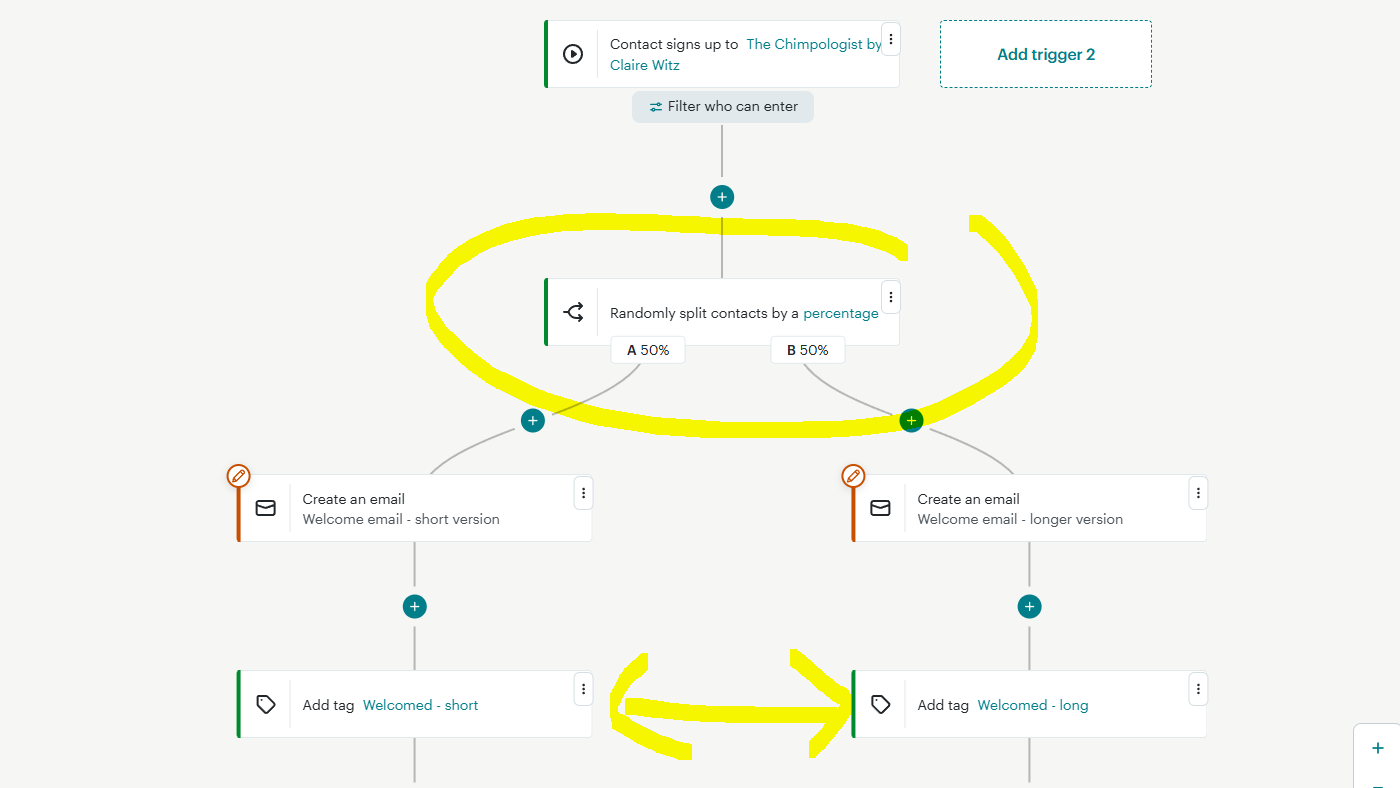

AB testing automations / customer journeys / flows

AB testing isn’t specifically included as an option in flows, but you can split the flow so that e.g. 50% of contacts go down one path and 50% down the other, then monitor performance manually over a period of time.

For example, you could add a different tag at the end of each path, then create segments such as “Purchased in last 30 days AND has tag X” and “Purchased in last 30 days AND has tag Y” to compare the impact.
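If you export your contacts to compare the two paths outside Mailchimp, here’s a minimal sketch of the comparison. The contact records, the “Path X” / “Path Y” tag names and the export layout are all hypothetical; adjust them to match your own data.

```python
from datetime import date, timedelta

# Hypothetical export: each contact's tags and last purchase date (None = never bought)
contacts = [
    {"email": "a@example.com", "tags": {"Path X"}, "last_purchase": date(2024, 5, 20)},
    {"email": "b@example.com", "tags": {"Path X"}, "last_purchase": None},
    {"email": "c@example.com", "tags": {"Path Y"}, "last_purchase": date(2024, 5, 28)},
    {"email": "d@example.com", "tags": {"Path Y"}, "last_purchase": date(2024, 3, 1)},
    {"email": "e@example.com", "tags": {"Path Y"}, "last_purchase": date(2024, 5, 30)},
]


def purchase_rate(contacts: list[dict], tag: str, today: date, days: int = 30) -> float:
    """Share of contacts with `tag` who purchased within the last `days` days."""
    tagged = [c for c in contacts if tag in c["tags"]]
    if not tagged:
        return 0.0
    cutoff = today - timedelta(days=days)
    bought = [
        c for c in tagged
        if c["last_purchase"] is not None and c["last_purchase"] >= cutoff
    ]
    return len(bought) / len(tagged)


today = date(2024, 6, 1)
for tag in ("Path X", "Path Y"):
    print(tag, f"{purchase_rate(contacts, tag, today):.0%}")
```

Dividing by the number of contacts on each path, rather than just counting the segment sizes, keeps the comparison fair if the split doesn’t come out exactly 50/50.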

-

Got questions? Need help? Just ask